Underlying Principles of Parallel and Distributed Computing Systems

- The terms parallel computing and distributed computing are often used interchangeably, although they mean slightly different things.

- Parallel computing implies a tightly coupled system.

- It is characterised by homogeneity of components (uniform structure).

- Multiple processors share the same physical memory.

Parallel Processing

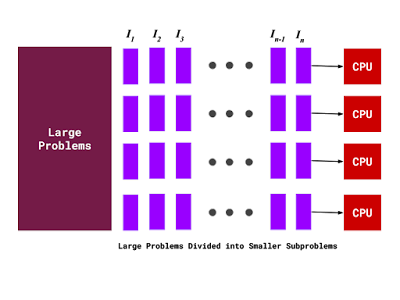

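- Processing multiple tasks simultaneously on multiple processors is called parallel processing.

- A parallel program consists of multiple processes (tasks) that simultaneously solve a given problem.

- The divide-and-conquer technique is used: the problem is split into smaller sub-problems, which are solved at the same time and whose results are then combined.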
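The divide-and-conquer idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the helper names `split` and `parallel_sum` are hypothetical, and the pool defaults to threads so the example runs anywhere. In CPython, threads do not run CPU-bound work on several cores at once, so passing `ProcessPoolExecutor` as `executor_cls` would be closer to real parallel processing.

```python
from concurrent.futures import ThreadPoolExecutor


def split(data, parts):
    """Divide: cut `data` into `parts` contiguous chunks of near-equal size."""
    size, extra = divmod(len(data), parts)
    chunks, start = [], 0
    for i in range(parts):
        # The first `extra` chunks take one additional element each.
        end = start + size + (1 if i < extra else 0)
        chunks.append(data[start:end])
        start = end
    return chunks


def parallel_sum(data, workers=4, executor_cls=ThreadPoolExecutor):
    """Sum `data` by handing one chunk to each worker, then combining."""
    chunks = split(data, workers)
    with executor_cls(max_workers=workers) as pool:
        # Conquer: each worker sums its own chunk independently.
        partial_sums = list(pool.map(sum, chunks))
    # Combine: add up the partial results.
    return sum(partial_sums)


total = parallel_sum(list(range(1, 101)))
print(total)
```

Each worker only ever sees its own chunk, so the tasks need no coordination until the final combine step.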

Applications for Parallel Processing

- Science and Engineering

  - Atmospheric Analysis

  - Earth Sciences

  - Electrical Circuit Design

- Industrial and Commercial

  - Data Mining

  - Web Search Engine

  - Graphics Processing

Why use parallel processing

- Save time and money: Applying more resources to a task shortens its time to completion, with potential cost savings.

- Provide concurrency: A single computing resource can do only one task at a time, whereas multiple resources can do many tasks simultaneously.

- Serial computing limits: The speed of a serial computer depends directly on how fast data can move through the hardware.

Parallel computing memory architecture types

- Shared memory architecture

- Distributed memory architecture

- Hybrid distributed shared memory architecture

1. Shared Memory Architecture

- All processors can access all memory as a global address space.

- Multiple processors can operate independently, but they share the same memory resources.

- A change made to a memory location by one processor is visible to all other processors.

- Shared memory is classified into two types:

  - Uniform Memory Access (UMA)

  - Non-Uniform Memory Access (NUMA)

Uniform Memory Access (UMA)

- It is represented by symmetric multiprocessor (SMP) machines.

- Identical processors have equal access and equal access time to memory.

Non-Uniform Memory Access (NUMA)

- One SMP can directly access the memory of another SMP.

- Not all processors have equal access time to all memories.

- Memory access across the link is slower.

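- Shared memory as a whole (UMA and NUMA) provides a global address space.

- The global address space gives a user-friendly programming perspective on memory.

- Data sharing between tasks is fast and, on UMA machines, uniform, because memory is close to the CPUs.

- Scalability between memory and CPUs is limited.

- Adding more CPUs increases the traffic on the shared path between memory and CPUs and the traffic associated with cache and memory management.

- The programmer is responsible for the synchronisation constructs that ensure correct access to global memory.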
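A minimal sketch of why shared memory needs synchronisation: several threads update the same memory location, and a lock makes each update atomic. The names `SharedCounter`, `worker` and `run` are hypothetical and chosen only for this illustration. Python threads stand in here for processors that share one address space.

```python
import threading


class SharedCounter:
    """A single value in shared memory, guarded by a lock."""

    def __init__(self):
        self.value = 0
        self._lock = threading.Lock()

    def increment(self):
        # The lock ensures only one thread performs the read-modify-write
        # on `value` at a time, so no update is lost.
        with self._lock:
            self.value += 1


def worker(counter, times):
    """Each thread updates the same shared counter `times` times."""
    for _ in range(times):
        counter.increment()


def run(num_threads=4, times=10_000):
    counter = SharedCounter()
    threads = [
        threading.Thread(target=worker, args=(counter, times))
        for _ in range(num_threads)
    ]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return counter.value


final_value = run()
print(final_value)
```

Every thread sees the changes made by the others because they all refer to the same object. Without the lock, concurrent increments could interleave and some updates might be lost.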

2. Distributed Memory Architecture

- A distributed system is a collection of independent computers that appears to its users as a single coherent system.

- Each processor has its own local memory.

- Memory scales with the number of processors.

- Each processor can rapidly access its own memory without interference.

- It makes cost-effective use of commodity processors and networking.

- Disadvantages:

  - The programmer is responsible for many of the details of data communication between processors.

  - Memory access times are non-uniform: data residing on a remote node takes longer to access than local data.
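The explicit communication that distributed memory demands can be sketched as follows. This is a simulation inside one process, not a real cluster: each "node" is a thread that touches only its own partition of the data, and a `queue.Queue` stands in for the network. The function names are hypothetical. A real system would typically use a message-passing library such as MPI instead.

```python
import queue
import threading


def node(local_data, outbox):
    """A simulated node: computes on its local data, then sends one message."""
    partial = sum(x * x for x in local_data)  # uses local memory only
    outbox.put(partial)                       # explicit communication step


def distributed_sum_of_squares(partitions):
    """Give each node one partition and collect one message per node."""
    inbox = queue.Queue()
    nodes = [
        threading.Thread(target=node, args=(part, inbox))
        for part in partitions
    ]
    for n in nodes:
        n.start()
    for n in nodes:
        n.join()
    # The coordinator never reads a node's data directly; it only
    # receives the messages the nodes chose to send.
    return sum(inbox.get() for _ in partitions)


result = distributed_sum_of_squares([[1, 2], [3, 4], [5]])
print(result)
```

Deciding how to partition the data and what to send is left entirely to the programmer, which is the first disadvantage listed above.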

3. Hybrid distributed shared memory

- The shared memory component can be a shared memory machine or a Graphics Processing Unit (GPU).

- The distributed memory component is the networking of multiple shared memory or GPU machines.

Advantages

- Increase scalability.

- It inherits the advantages of both shared and distributed memory architectures.

Disadvantages

- Increased programming complexity is an important disadvantage.
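The two levels of a hybrid design can be sketched by combining both ideas: inside each simulated node, a small thread pool shares the node's local data, and between nodes only messages are exchanged. As before, this is a single-process simulation with hypothetical names (`node`, `hybrid_count_even`), where a queue stands in for the network.

```python
import queue
import threading
from concurrent.futures import ThreadPoolExecutor


def node(local_data, outbox, threads_per_node=2):
    """One simulated node: its threads share `local_data`, then it sends one message."""

    def count_even(start):
        # Shared-memory level: every thread reads the same node-local list,
        # each one striding through it from its own starting offset.
        return sum(1 for x in local_data[start::threads_per_node] if x % 2 == 0)

    with ThreadPoolExecutor(max_workers=threads_per_node) as pool:
        local_count = sum(pool.map(count_even, range(threads_per_node)))
    # Distributed-memory level: only the result leaves the node.
    outbox.put(local_count)


def hybrid_count_even(partitions):
    """Run one node per partition and add up the counts they send back."""
    inbox = queue.Queue()
    nodes = [threading.Thread(target=node, args=(p, inbox)) for p in partitions]
    for n in nodes:
        n.start()
    for n in nodes:
        n.join()
    return sum(inbox.get() for _ in partitions)


even_total = hybrid_count_even([[1, 2, 3, 4], [6, 7, 8], [10]])
print(even_total)
```

Even in this small sketch the programmer has to reason about two layers at once, work sharing within a node and messaging between nodes, which is where the added complexity comes from.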
